23-Step Core SEO Audit Process to Boost Your Search Rankings

An SEO audit assesses the overall health of your website and is an important component of any successful internet marketing strategy. The concept is simple, but a good SEO audit is anything but easy. Analyzing the efficiency of your website and marketing efforts means more than counting the visitors to your site over a given period. It's about figuring out where each click came from and what percentage of visitors converted into sales. A good audit also identifies the weak points in your marketing strategy, the opportunities available to increase your traffic, and any competitors that could jeopardize your marketing efforts.

This article walks through the important steps of a core SEO audit and should help you immensely with your first one. I'll begin with the basic steps and work my way up to the critical ones.


What are your client’s top priorities?

Based on those priorities, build a roadmap for your evaluation. Separate the MUST FIX errors and issues from the NICE TO FIX ones. Make sure your goals are SMART and tied to your business objectives: Specific, Measurable, Attainable, Relevant, and Timely.

What has already been achieved?

Next, take stock of the optimization work that has already been done. As you audit, ask how secure, fast, and easy to use the site is, and how much of its content relates to the terms you want to be found for in search engines.

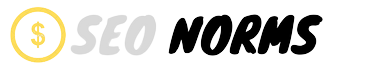

Analyze the SERP results before you start the optimization process

Don’t start with website optimization. Start with the search engine results pages (SERPs). Go through at least 10 pages of results for your ranking keywords and try to understand how pages are being indexed and displayed. Concentrate on the following:

- How are URLs showing up?

- How are title tags showing up?

- What do the snippets look like?

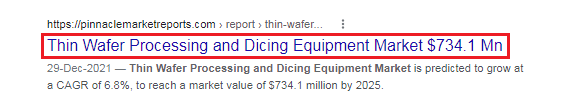

How well-optimized are the title tags?

A descriptive title signals a web page’s relevance to a keyword search. Keep titles to a maximum of 50-55 characters, or 600 pixels wide, and place the keywords you want to optimize for at the beginning. A common misconception is that Google limits title tags by character count; in fact, Google truncates titles in search results by pixel width. So I export my title tag data from Screaming Frog into Excel, change the font to Arial at 20px, and set the column width to 600 pixels, Google’s cut-off. Anything over 600 pixels is too long.

Here are the most important guidelines you should use for your title tags:

- Title tags should be 50 to 55 characters in length.

- Don’t use the same title tag on multiple pages.

- Use the page’s keyword twice in the title if space permits: once at the beginning, followed by a separator, and again in the call to action.

- If relevant, include a geo-qualifier.

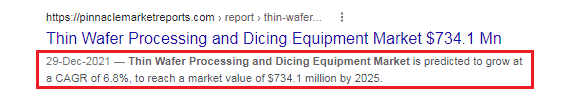
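You can check these title guidelines in bulk. The sketch below assumes you have already loaded URL and title pairs into a Python dict, for example from a Screaming Frog export; reading that file is not shown. It uses character count as a stand-in for the 600-pixel limit, because true pixel width depends on font rendering. The function name `audit_titles` and the 55-character threshold are illustrative choices, not any tool's API.

```python
from collections import defaultdict

MAX_TITLE_CHARS = 55  # character proxy for the ~600px display cutoff


def audit_titles(titles: dict[str, str]) -> dict[str, list[str]]:
    """Return {url: [problems]} for title tags that break the guidelines."""
    issues: dict[str, list[str]] = defaultdict(list)

    # Group URLs by normalized title so duplicates across pages stand out.
    by_title: dict[str, list[str]] = defaultdict(list)
    for url, title in titles.items():
        by_title[title.strip().lower()].append(url)

    for url, title in titles.items():
        text = title.strip()
        if not text:
            issues[url].append("missing title")
            continue
        if len(text) > MAX_TITLE_CHARS:
            issues[url].append(f"too long ({len(text)} chars)")
        if len(by_title[text.lower()]) > 1:
            issues[url].append("duplicate title")
    return dict(issues)
```

Pages with no problems are simply absent from the result, so an empty dict means every title passed.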

What about the Meta Description tags?

The meta description is a short, accurate summary of your page’s content, and it can affect the click-through rate (CTR) of your search results. Keep it to no more than 150 to 160 characters. The biggest mistake with meta descriptions is stuffing them with target keywords. Including keywords helps, but the description should also read well: the more compelling it is, the more likely searchers are to click your result.

Here are some guidelines for creating Meta Descriptions:

- Make sure they are unique and relevant to your page.

- They should be written as descriptive text with a call to action.

- No more than 160 characters in length, including spaces and punctuation (140-150 is ideal), but no fewer than 51 characters.

- It should contain 1-2 complete sentences with correct punctuation and no more than 5 commas.

- Use the keyword once per sentence, as close to the start of each sentence as possible.

- Include a geo-qualifier if relevant.
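Several of the guidelines above are mechanical enough to check in code. The sketch below covers length, sentence count, ending punctuation, comma count, and keyword presence per sentence. It does not judge whether the keyword sits near the start of each sentence or whether the text reads well. The sentence splitting is a naive regex, and `check_meta_description` is a hypothetical helper name.

```python
import re


def check_meta_description(desc: str, keyword: str) -> list[str]:
    """Return a list of guideline problems found in a meta description."""
    problems = []
    text = desc.strip()
    if len(text) > 160:
        problems.append(f"too long ({len(text)} chars; max 160)")
    elif len(text) < 51:
        problems.append(f"too short ({len(text)} chars; min 51)")

    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s]
    if not 1 <= len(sentences) <= 2:
        problems.append(f"{len(sentences)} sentences (expected 1-2)")
    if not text.endswith((".", "!", "?")):
        problems.append("does not end with punctuation")
    if text.count(",") > 5:
        problems.append("more than 5 commas")

    # The keyword should appear once in each sentence (presence only).
    kw = keyword.lower()
    for s in sentences:
        if kw not in s.lower():
            problems.append(f"keyword missing from sentence: {s!r}")
    return problems
```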

Are the targeted pages optimized for the primary keywords?

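Are your web pages optimized for their target keywords and keyphrases? From the title tag, meta description, and meta keywords data, you can tell what a website is trying to rank for. Combining that data with your Google Analytics keyword data shows you how the site actually gets its traffic. Google Analytics now reports most organic keywords as “(not provided)”, so Search Console’s query data is usually the better source.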

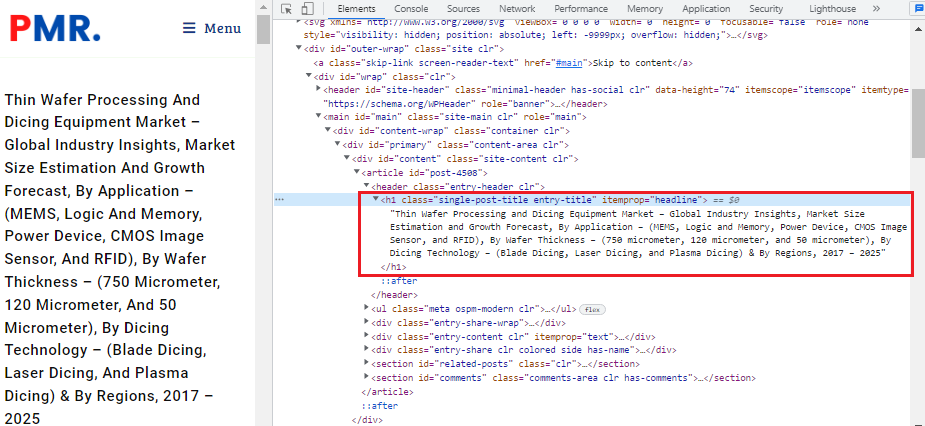
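A quick way to confirm that a page targets its primary keyword is to check whether the keyword appears in the title, meta description, and H1. The sketch below uses Python's standard `html.parser`. The class and function names are hypothetical, and the check is a simple case-insensitive substring match rather than anything a search engine actually does.

```python
from html.parser import HTMLParser


class OnPageExtractor(HTMLParser):
    """Collect the title, meta description and H1 text from a page's HTML."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "description": "", "h1": ""}
        self._current = None  # which text field we are currently inside

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._current = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.fields["description"] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.fields[self._current] += data


def keyword_coverage(html: str, keyword: str) -> dict[str, bool]:
    """Report whether the keyword appears in each on-page element."""
    parser = OnPageExtractor()
    parser.feed(html)
    kw = keyword.lower()
    return {name: kw in text.lower() for name, text in parser.fields.items()}
```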

Make proper use of H1 and H2 heading tags

Headings create a clear content hierarchy within your pages and help define sections, topics, and keywords. They tell visitors and search engines that the text that follows is meaningfully related to the terms in the heading. H1 and H2 headings help readers navigate sub-topics. They matter less than the title tag and meta description, but they can still help search engines find you.

- The most important heading on the page should be the H1.

- There is usually only one H1 on any page.

- Sub-headings should be H2’s, sub-sub-headings should be H3’s, etc.

- Each heading should contain valuable keywords; if not, it’s a wasted heading.

- In longer pieces of content, a heading is what helps a reader skip to the parts that they find interesting.

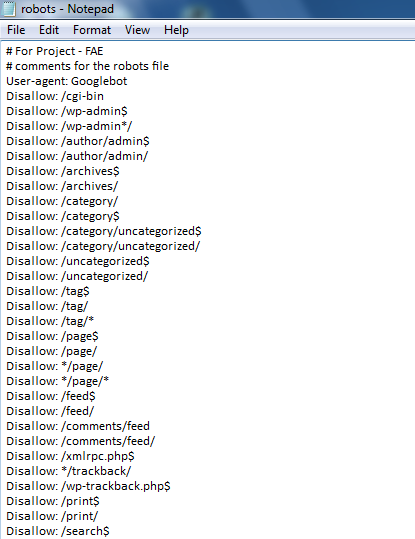
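The heading rules above can be checked automatically. The sketch below records heading levels in document order, flags pages that don't have exactly one H1, and flags jumps that skip a level (for example, an H2 followed directly by an H4). It does not check whether each heading contains useful keywords. The names are illustrative.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record the level (1-6) of every heading tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def audit_headings(html: str) -> list[str]:
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    problems = []
    h1_count = levels.count(1)
    if h1_count != 1:
        problems.append(f"expected one H1, found {h1_count}")
    # Going deeper by more than one level at a time breaks the hierarchy.
    for prev, cur in zip(levels, levels[1:]):
        if cur > prev + 1:
            problems.append(f"H{prev} followed by H{cur} skips a level")
    return problems
```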

The significance of the robots.txt file

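The robots.txt file is a text file that tells web crawlers, such as Googlebot, which parts of your site they may crawl. It lists directives, such as Allow and Disallow, that tell crawlers which URLs they can or cannot retrieve. Note that robots.txt controls crawling, not indexing. A disallowed URL won’t have its content crawled, but it can still appear in Google Search results, usually without a description, if other pages link to it. To keep a page out of the results entirely, use a noindex tag instead.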
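Before deploying robots.txt changes, you can test which URLs a set of rules allows. The sketch below uses Python's standard `urllib.robotparser` on a hypothetical robots.txt. One caveat: Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) matching rule. The example therefore lists the `Allow` line before the broader `Disallow` so both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Allow: /search/help
Disallow: /search
Disallow: /cart/
"""


def crawl_permissions(robots_txt: str, urls: list[str],
                      agent: str = "Googlebot") -> dict[str, bool]:
    """Return {url: True if the agent may crawl it} under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}
```

A URL that comes back `False` here can still be indexed if other pages link to it, as described above.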

Fixing broken links can significantly boost your rankings

Lots of broken or dead links can signal a site that is poorly managed or low in quality, so fixing them can help your rankings. You can use the Screaming Frog SEO Spider to find all the broken images and broken links within a website. Google Search Console reports external links pointing to pages that no longer exist and that generate 404 errors. Because Google and other search engines such as Bing crawl the web from link to link, broken links can cause SEO problems: every broken link is a dead end for the crawler. If Googlebot encounters too many broken links on a site, it may conclude that the site offers a poor user experience. That can reduce crawl rate and depth and cause indexing and ranking problems.

Unfortunately, broken links can also happen because someone outside your site links to you incorrectly. While you can’t prevent these broken links, you can easily fix them with a 301 redirect.

To avoid user and search engine problems, routinely check Google Search Console and Bing Webmaster Tools for crawl errors. Also run a tool such as Xenu’s Link Sleuth on your site to make sure there are no broken crawlable links.

If you find broken links, implement 301 redirects following the guidelines in the URL redirects section below.
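If you want to spot-check a list of links yourself, the sketch below sends HEAD requests with Python's standard `urllib`. It reports anything that doesn't end in a 2xx or 3xx response, with 0 standing in for network failures. The status lookup is passed in as a function, so you can test the logic without touching the network. Some servers answer HEAD with 405, so treat those results as "recheck with GET" rather than broken. The helper names are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status for url via a HEAD request (0 on network failure)."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        # urlopen follows redirects, so this is the status of the final URL.
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code
    except OSError:  # URLError, timeouts, DNS failures
        return 0


def find_broken_links(urls: list[str],
                      fetch: Callable[[str], int] = http_status) -> dict[str, int]:
    """Return {url: status} for every URL that does not resolve successfully."""
    broken = {}
    for url in urls:
        status = fetch(url)
        if not 200 <= status < 400:
            broken[url] = status
    return broken
```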

Consider Domain Authority (DA) and Page Authority (PA)

Page Authority is scored on a 100-point logarithmic scale, so it’s easier to grow your score from 20 to 30 than from 70 to 80.

Whereas the Page Authority (PA) measures the probable ranking strength of a single page, Domain Authority (DA) measures the strength of entire domains or subdomains. The concept is the same for metrics such as MozRank and MozTrust.

When researching the search results, it’s best to use Page Authority (PA) and Domain Authority (DA) as comparative metrics to determine which sites and pages may have more powerful link profiles than others. More specific metrics like MozRank can answer questions of raw link popularity, and link counts show how many pages and sites link to the site. These high-level metrics try to answer the question, “How strong are this page’s/site’s links in terms of helping them rank for queries in Google.com?”

Include your keywords in the image’s “alt” attributes

Alt attributes are like meta descriptions for images: if an image fails to load, the alt text is shown in its place. It’s good practice to write humanized descriptions or captions that include your keywords so that search engines can interpret the image’s meaning. Every image should have an alt attribute, for the benefit of search engines, code compliance, and visually impaired users who rely on screen readers. The alt text should describe the image accurately and include a keyword relevant to your website, but only if the keyword is relevant to the image. Image file names should be descriptive words rather than numbers or query strings. They should accurately describe the image and, if applicable, include the keyword. When an image is used as a link, its alt text serves as the anchor text. A linked image should be structured as follows:

```html
<a href="http://www.thetargeturl.com/"><img src="http://www.yourdomain.com/images/keyword-rich-image-name.jpg" alt="Describe the Image and use a keyword if relevant" /></a>
```

By making sure all images are properly named and tagged, you increase their SEO value and the likelihood of receiving referral traffic from image search results.

All images should also specify height and width attributes, both for code compliance and so the browser can reserve space for them while the page loads.
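An image audit can be scripted with the standard `html.parser`. The sketch below flags `<img>` tags with missing or empty alt text, missing width or height, or a non-descriptive file name. A non-descriptive name here means a purely numeric or camera-style name such as `IMG_0042.jpg`, or a src containing a query string. The file-name heuristic and all names are assumptions for illustration. An empty `alt=""` is legitimate for purely decorative images, so treat that flag as a prompt to review.

```python
import re
from html.parser import HTMLParser

# Camera-style or purely numeric file names, e.g. IMG_0042 or 12345.
GENERIC_NAME = re.compile(r"(img|dsc|dscn|image|photo)?[_-]?\d+")


class ImageAuditor(HTMLParser):
    """Collect (src, problem) pairs for <img> tags that break the guidelines."""

    def __init__(self):
        super().__init__()
        self.problems: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or ""
        if not (a.get("alt") or "").strip():
            self.problems.append((src, "missing or empty alt"))
        if "width" not in a or "height" not in a:
            self.problems.append((src, "missing width/height"))
        filename = src.split("?", 1)[0].rsplit("/", 1)[-1]
        stem = filename.rsplit(".", 1)[0].lower()
        if "?" in src or GENERIC_NAME.fullmatch(stem):
            self.problems.append((src, "non-descriptive file name"))


def audit_images(html: str) -> list[tuple[str, str]]:
    auditor = ImageAuditor()
    auditor.feed(html)
    return auditor.problems
```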

Manage page warnings

If your site has been hacked or serves malicious code on any of its pages, Google may stop indexing it or show a warning page whenever a user clicks your link in Google Search. The best option is to use Google Search Console’s Security Issues report to identify and fix the problem so your site can appear in search normally again.

Internal linking makes it easier for search engine crawlers to crawl the entire website

Both Google Search Console and Screaming Frog provide data on internal links. Where relevant, the more you link between pages on your site, the easier it is for search engine crawlers to reach the entire website. A common rule of thumb is to keep each page under 100 links. Sometimes you won’t be able to, which is fine, but try to stay under that limit.

When linking internally, also review all of your anchor text. Avoid using keyword-rich anchor text every time; mix in natural link text such as “click here” or “learn more.” Too much keyword-rich anchor text may push your rankings down a few slots.

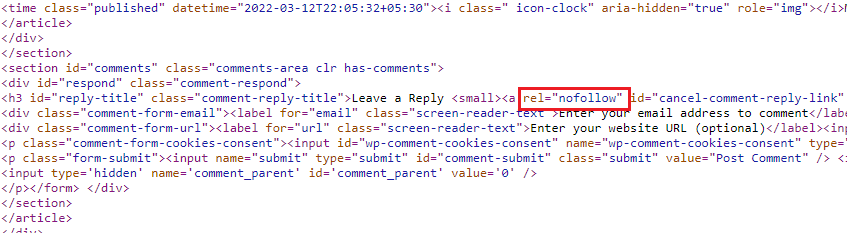
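If you want a per-page view alongside the crawler reports, the sketch below collects every link on a page with the standard `html.parser`. It counts the total and internal links against the 100-link rule of thumb and lists the most repeated internal anchor texts, which helps spot over-used keyword phrases. It treats a link as internal only when its host exactly matches the base URL's host, so `www.example.com` and `example.com` count as different. All names are illustrative.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect (absolute href, anchor text) pairs for every <a href> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []
        self._href = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self._href = urljoin(self.base_url, href)
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None


def internal_link_report(html: str, base_url: str) -> dict:
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    internal = [(u, t) for u, t in collector.links if urlparse(u).netloc == host]
    return {
        "total_links": len(collector.links),
        "internal_links": len(internal),
        "over_100": len(collector.links) > 100,
        "top_anchor_texts": Counter(t.lower() for _, t in internal).most_common(3),
    }
```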

When should you use Nofollow links?

Google measures how pages link together and assigns a value to those links based on traffic, relevancy, age, size, content, and hundreds of other factors. When a page that Google deems relevant links to another page, some of its “link juice” flows through that link to the page being linked to. A “followed” link acts as an endorsement of the linked page.

Use the rel="nofollow" attribute.

Google introduced this attribute to help preserve the relevance of PageRank, which blog and forum comment spammers were undermining. When rel="nofollow" is included in an anchor tag, Google will usually pass little or no “link juice” to the linked page. Using it is like saying, “this page is nice, but we don’t endorse it.” Use nofollow on blog comments, site-wide external links, and any internal links pointing to low-quality pages.

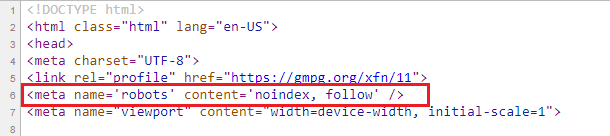
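To review which external links still pass link value, the sketch below lists every external http(s) link on a page whose `rel` attribute doesn't include `nofollow`. That covers the comment links and site-wide external links mentioned above. It splits `rel` on whitespace because the attribute can hold several tokens, such as `nofollow ugc`. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ExternalLinkChecker(HTMLParser):
    """List external links whose rel attribute does not include nofollow."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.host = urlparse(base_url).netloc
        self.followed_external: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        a = dict(attrs)
        href = a.get("href")
        if not href:
            return
        url = urljoin(self.base_url, href)
        parsed = urlparse(url)
        # Skip internal links and non-web schemes such as mailto:.
        if parsed.scheme not in ("http", "https") or parsed.netloc == self.host:
            return
        rel_tokens = (a.get("rel") or "").lower().split()
        if "nofollow" not in rel_tokens:
            self.followed_external.append(url)


def followed_external_links(html: str, base_url: str) -> list[str]:
    checker = ExternalLinkChecker(base_url)
    checker.feed(html)
    return checker.followed_external
```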

Page exclusions

Adding noindex tags to pages you don’t want indexed is a simple way to keep them out of search results. Search engines dislike sites with hundreds of poor-quality pages that have little or no content or duplicate content, so you should remove such pages from the index. The simplest approach is either to remove them from your website or to block them from being indexed. Google’s quality-focused algorithm updates can penalize sites with thousands of poor pages, so make sure you don’t allow substandard pages to be indexed. To noindex a page, include the following code in its head: `<meta name="robots" content="noindex">`. Don’t also block that page in robots.txt: if crawlers can’t fetch the page, they never see the noindex tag.

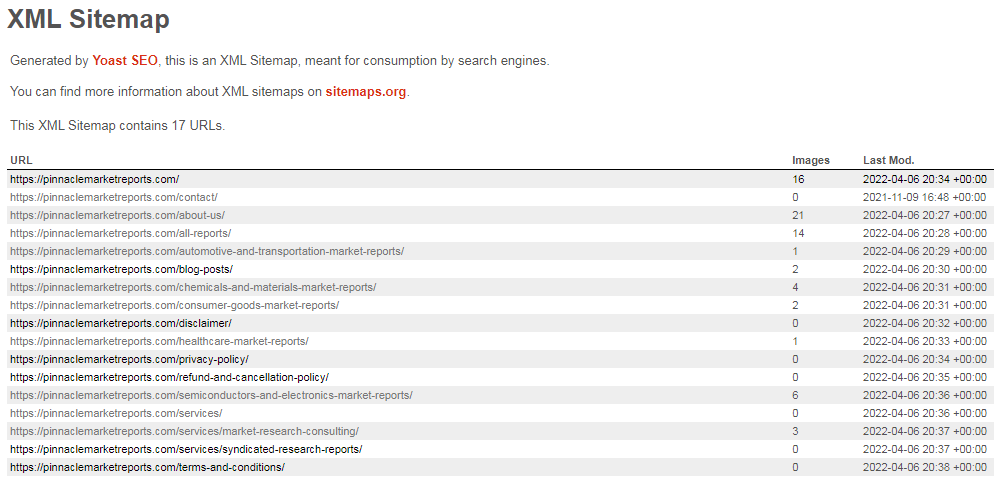
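A common exclusion mistake is blocking a page in robots.txt while also relying on its noindex tag. The sketch below reads the page's robots meta tag and tests the URL against robots.txt with the standard library, then reports which case applies. It only looks at `<meta name="robots">` and `<meta name="googlebot">`, not the `X-Robots-Tag` HTTP header. The function and class names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaReader(HTMLParser):
    """Collect directives from <meta name="robots"> or <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "meta" and (a.get("name") or "").lower() in ("robots", "googlebot"):
            content = a.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def check_exclusion(url: str, html: str, robots_txt: str, agent: str = "Googlebot") -> str:
    reader = RobotsMetaReader()
    reader.feed(html)
    noindex = "noindex" in reader.directives

    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    crawlable = robots.can_fetch(agent, url)

    if noindex and not crawlable:
        return "conflict: blocked in robots.txt, so crawlers cannot see the noindex tag"
    if noindex:
        return "excluded via noindex"
    if not crawlable:
        return "blocked from crawling (may still be indexed if linked)"
    return "indexable"
```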

Page inclusions

A sitemap can improve your website’s indexation. You can generate both an HTML and an XML sitemap. Submit your XML sitemap in Google Search Console (formerly Webmaster Tools), which reports how many of the submitted URLs have been indexed. Rarely will 100% of the URLs you submit be indexed, especially on a large website. Internal linking, however, increases the chances of all your pages being indexed.
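If your platform doesn't generate an XML sitemap for you, a basic one is easy to build. The sketch below uses Python's standard `xml.etree.ElementTree` to write the `urlset`/`url`/`loc`/`lastmod` structure from the sitemaps.org protocol. It assumes you already have a list of absolute URLs with last-modified dates; how you collect them is up to your site, and `build_sitemap` is a hypothetical name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build sitemap.xml bytes from (absolute URL, YYYY-MM-DD lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```

Write the returned bytes to `sitemap.xml` at your site root, then submit that URL in Search Console.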

Set up appropriate URL redirects and monitor them

You should never use 302 redirects unless the redirection is truly temporary (such as for time-sensitive offer pages). A 302 tells search engines the move is temporary, so they may keep the old URL indexed, and 302s have long been treated as passing little or no link value. Use 301 redirects in practically every situation where a redirect is required. Every website that changes URLs or deletes pages needs 301 permanent redirects to notify search engines and users that a page has moved or is no longer available.

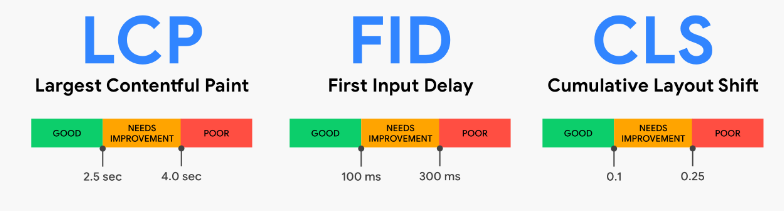
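Monitoring redirects includes catching chains and loops, which waste crawl activity and can leave users stuck. The sketch below assumes you keep your redirects as an `{old_url: new_url}` map, for example exported from server configuration or a redirect plugin. It classifies each entry as fine, a multi-hop chain, or a loop. It doesn't check live HTTP status codes or whether each rule is a 301 or a 302. The function name is hypothetical.

```python
def audit_redirect_map(redirects: dict[str, str]) -> dict[str, str]:
    """Classify each old URL in a {old: new} redirect map as ok, chain, or loop."""
    results = {}
    for start in redirects:
        seen = [start]
        current = redirects[start]
        # Keep following while the target is itself redirected and not yet visited.
        while current in redirects and current not in seen:
            seen.append(current)
            current = redirects[current]
        if current in seen:
            results[start] = "loop: " + " -> ".join(seen + [current])
        elif len(seen) > 1:
            results[start] = f"chain of {len(seen)} hops; point {start} straight at {current}"
        else:
            results[start] = "ok"
    return results
```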

Improve page speed for faster page load times

A commonly cited target, often attributed to Google, is a page load time under 2 seconds. If your pages load faster than that, you should be in good shape; if they are slow, your rankings may not be as high as they could be.

If you’re unsure what your page load speed is, use Pingdom’s free speed test tool.

Once you know how long your site takes to load, you can usually speed it up by using browser caching, CSS minification, and shrinking image file sizes as much as possible.

Merging CSS and JavaScript files into fewer files reduces the total number of CSS and JavaScript requests, and compressing and minifying those files reduces their size. Using a CDN (content delivery network) for your images may also be beneficial.

W3 Total Cache is a great WordPress plugin that can help with page load speed issues and can set up a simple CDN via Amazon AWS for very little money.
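To see why merging and compressing help, the sketch below concatenates several stylesheet sources into one bundle, which turns many requests into one, and measures the gzip-compressed size with Python's standard `gzip` module. It only estimates savings: in production your web server or a plugin like the one above normally applies compression, and minification needs a dedicated tool. Concatenation order matters, especially for JavaScript, so the input dict's insertion order is kept. The function name and sample files are hypothetical.

```python
import gzip


def bundle_and_compress(files: dict[str, str]) -> dict[str, int]:
    """Concatenate CSS (or JS) sources in order and measure gzip savings."""
    bundle = "\n".join(files.values()).encode("utf-8")
    compressed = gzip.compress(bundle)
    return {
        "requests_before": len(files),
        "requests_after": 1,
        "bytes_raw": len(bundle),
        "bytes_gzipped": len(compressed),
    }
```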

Ask these questions to reduce high exit rates on your pages

- Is it easy for leads to contact you on landing pages?

- Is there enough information about your service on landing pages?

- Are you showing enough social proof on landing pages?

- What is the exit percentage? As a rough rule of thumb, anything over 80% is too high, and 50-65% is normal.

- Does this page solve a problem or answer a question to the fullest extent?

- Are there still some questions left unanswered?

- How is the readability of the content?

- Are there too many big blocks of text?

- Too few images?

- Broken images?

- Does the page load slowly?

- Are there distracting elements such as advertisements that would send users off your site?

- Are you setting external links to “open in a new window”? (if not, you should)

- Social proof should appear on the home page and landing pages.

- Onsite sales can be increased by copywriting and videos.
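Exit rate is commonly calculated as a page's exits divided by its pageviews. The sketch below computes it from those two numbers, which you would read from your analytics report, and buckets the result using the rough thresholds given in the list above. Those thresholds are a rule of thumb, not an official benchmark, and the function names are illustrative.

```python
def exit_rate(exits: int, pageviews: int) -> float:
    """Exit rate as a percentage of the page's views that ended the session."""
    if pageviews <= 0:
        raise ValueError("pageviews must be positive")
    return 100.0 * exits / pageviews


def classify_exit_rate(rate: float) -> str:
    """Bucket an exit rate using the rough thresholds above."""
    if rate > 80:
        return "too high"
    if rate > 65:
        return "above normal"
    if rate >= 50:
        return "normal"
    return "below normal"
```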

Analyze the information in Google Search Console

Use Google Search Console to:

- Make sure that Google can access your content.

- Submit new content for crawling and remove content you don’t want shown in search results.

- Maintain your site with minimal disruption to search performance.

- Monitor and resolve malware or spam issues so that your site stays clean.

It can also answer questions such as:

- Which queries caused your site to appear in search results?

- Did some queries result in more traffic to your site than others?

- Are your product prices, company contact info, or events highlighted in rich search results?

- Which sites are linking to your website?

- Is your mobile site performing well for visitors searching on mobile?
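To see which queries drive the most traffic, you can work directly with a Performance report export. The sketch below parses that CSV with Python's standard `csv` module, recomputes CTR from clicks and impressions, and returns the top queries by clicks. The column names (`Top queries`, `Clicks`, `Impressions`) are an assumption based on a typical export, so check the header row of your own file and adjust the constants if they differ.

```python
import csv
import io

# Assumed column names; verify against your export's header row.
QUERY_COL, CLICKS_COL, IMPRESSIONS_COL = "Top queries", "Clicks", "Impressions"


def top_queries(csv_text: str, n: int = 5) -> list[tuple[str, int, int, float]]:
    """Return the n queries with most clicks as (query, clicks, impressions, ctr%)."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        clicks = int(row[CLICKS_COL].replace(",", ""))
        impressions = int(row[IMPRESSIONS_COL].replace(",", ""))
        ctr = 100.0 * clicks / impressions if impressions else 0.0
        rows.append((row[QUERY_COL], clicks, impressions, round(ctr, 1)))
    rows.sort(key=lambda r: r[1], reverse=True)
    return rows[:n]
```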

Using Google Analytics, you can identify critical SEO factors

Google Analytics is not itself a ranking factor, but it is an invaluable free tool for analyzing deep SEO factors within your site. If you are unsure whether the Analytics code is installed, check your website’s source code.
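Checking the source for the tracking code can be automated with a few regular expressions. The sketch below looks for the gtag.js loader URL and its tag ID, Google Tag Manager container IDs (`GTM-...`), and the older `analytics.js`/`ga.js` script. It's a heuristic string search over raw HTML that you fetch yourself. It won't see tags injected later by JavaScript, and a GTM container may load Analytics without any gtag.js URL in the page. The patterns and names are assumptions for illustration.

```python
import re

GTAG_ID = re.compile(r"googletagmanager\.com/gtag/js\?id=((?:G|UA|AW)-[A-Z0-9-]+)")
GTM_ID = re.compile(r"\b(GTM-[A-Z0-9]+)\b")
LEGACY_SCRIPT = re.compile(r"google-analytics\.com/(?:analytics|ga)\.js")


def detect_google_tags(html: str) -> dict:
    """Heuristically report which Google tags appear in raw page source."""
    return {
        "gtag_ids": sorted(set(GTAG_ID.findall(html))),
        "gtm_containers": sorted(set(GTM_ID.findall(html))),
        "legacy_analytics_js": bool(LEGACY_SCRIPT.search(html)),
    }
```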

Check for URL canonicalization issues on your website

Test your site for URL canonicalization issues: www vs. non-www. If your site resolves at both http://mysite.com and http://www.mysite.com, choose one and redirect the other to it. Without a redirect, search engines can treat them as duplicate sites and may be confused about which one to index and rank.
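The redirect rule itself is simple to express. Given a requested URL and the host you chose (with or without www), the sketch below returns the URL that a 301 should point to, or `None` if the URL is already canonical or belongs to another site. It uses Python's standard `urllib.parse`. It also assumes you have standardized on HTTPS; pass `preferred_scheme="http"` if not. It drops any port number, and the function name is hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def canonical_redirect(url: str, preferred_host: str,
                       preferred_scheme: str = "https") -> Optional[str]:
    """Return the 301 target for url, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    preferred = preferred_host.lower()

    def strip_www(h: str) -> str:
        return h[4:] if h.startswith("www.") else h

    if strip_www(host) != strip_www(preferred):
        return None  # a different site entirely; not ours to redirect
    if host == preferred and parts.scheme == preferred_scheme:
        return None  # already canonical
    return urlunsplit((preferred_scheme, preferred, parts.path or "/",
                       parts.query, parts.fragment))
```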

Evaluate the XML Sitemap

Check whether your site uses a sitemap.xml or sitemap.xml.gz file. These files are the easiest way to tell search engines what to crawl and index on your website. A sitemap is simply a list of URLs with extra data, such as each page’s last update date, priority, and how often it changes. If your site doesn’t have an XML sitemap, create one.
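When evaluating an existing sitemap, a few structural checks catch common problems. The sketch below parses a sitemap (plain or gzip-compressed, detected by the gzip magic bytes) with the standard library. It reports the URL count against the sitemaps.org limit of 50,000 URLs per file, entries without `lastmod`, and duplicate URLs. It doesn't handle sitemap index files, and `evaluate_sitemap` is a hypothetical name.

```python
import gzip
import xml.etree.ElementTree as ET
from collections import Counter

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def evaluate_sitemap(data: bytes) -> dict:
    """Summarize a sitemap.xml or sitemap.xml.gz: size, missing lastmod, duplicates."""
    if data[:2] == b"\x1f\x8b":  # gzip magic bytes, i.e. a .xml.gz file
        data = gzip.decompress(data)
    root = ET.fromstring(data)
    locs, missing_lastmod = [], []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", default="", namespaces=NS).strip()
        locs.append(loc)
        if url.find("sm:lastmod", NS) is None:
            missing_lastmod.append(loc)
    counts = Counter(locs)
    return {
        "url_count": len(locs),
        "over_limit": len(locs) > MAX_URLS,
        "missing_lastmod": missing_lastmod,
        "duplicates": sorted(u for u, c in counts.items() if c > 1),
    }
```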

What should you do after an SEO audit?

Once you’ve created a detailed SEO audit report, you’ll need to decide what to do with it. Your role is to explain each finding and how it relates to the website and its marketing success. The audit gives you a basis for developing a new marketing strategy, and you should help develop that strategy. Although an SEO audit is not easy, it is one of the most critical things you can do for the health of your website and online business.