Should You Block Google From Crawling Internal Search Result Pages?

Pros and cons of blocking Google Bots from crawling internal search result pages

Understanding the Importance of Blocking Google from Crawling Internal Search Result Pages

There are several factors to consider when deciding whether website owners should block Google from crawling internal search result pages. Let’s dive into the pros and cons of this practice to better understand its impact on SEO performance.

The Pros of Blocking Google from Crawling Internal Search Result Pages

One of the main advantages of blocking Google from indexing internal search result pages is to prevent duplicate content issues. Internal search result pages often contain a mix of parameters that can generate numerous URL variations, leading to duplicate content problems. By blocking Google from crawling these pages, you can avoid diluting the SEO authority of your website.
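To make the URL-variation problem concrete, here is a minimal sketch in Python. It assumes the search term lives in a `q` query parameter and uses made-up `example.com` URLs; neither comes from any particular site. It shows how several distinct URLs can all resolve to the same search.

```python
from urllib.parse import urlsplit, parse_qsl


def search_key(url, query_param="q"):
    """Return the normalized search term of a search URL, or None if absent.

    The parameter name "q" is an assumption; use whatever your site search uses.
    """
    params = dict(parse_qsl(urlsplit(url).query))
    term = params.get(query_param)
    if term is None:
        return None
    # Lowercase and collapse repeated whitespace so trivial variants match.
    return " ".join(term.lower().split())


# Hypothetical URLs that differ only in casing, parameter order, or extras.
urls = [
    "https://example.com/search?q=red+shoes",
    "https://example.com/search?q=Red+Shoes&sort=price",
    "https://example.com/search?sort=price&q=red%20shoes&page=1",
    "https://example.com/search?q=red++shoes&utm_source=newsletter",
]

distinct_terms = {search_key(u) for u in urls}
print(len(urls), "URLs,", len(distinct_terms), "distinct search term")
```

Each of these URLs is a separate address to a crawler, even though a visitor would see essentially the same results on all of them.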

Moreover, blocking internal search result pages can help you maintain a cleaner and more user-friendly sitemap. By excluding these pages from Google’s index, you can ensure that only your most relevant and valuable content is being prioritized for search engine visibility.

The Cons of Blocking Google from Crawling Internal Search Result Pages

On the flip side, blocking Google from accessing internal search result pages might prevent some of your website content from being discovered and indexed by the search engine. This could impact the overall visibility of your site in search results, especially if your internal search pages contain valuable content that could drive organic traffic.

Another drawback of blocking internal search result pages is the missed opportunity to leverage long-tail keywords and user-generated content that may be present on these pages. Allowing Google to crawl and index these pages could expand the keyword universe for your website and attract more targeted traffic.

The decision to block Google from crawling internal search result pages depends on several factors, including the size and complexity of your website, the nature of your internal search pages, and your overall SEO strategy. Weighing the pros and cons before implementing any changes is essential to ensure your website’s visibility and organic traffic are not unduly compromised.

Impact on SEO performance when Google indexes internal search result pages

When Google indexes internal search result pages on a website, it can have positive and negative implications for its SEO performance. On the one hand, indexing these pages can lead to more content appearing in search results and driving organic traffic to your website. This can be particularly beneficial if the internal search pages contain valuable and unique content for which users search.

However, there are also drawbacks to allowing Google to index internal search result pages. One major issue is that these pages often contain duplicate content that already exists elsewhere on the site. When Google indexes multiple pages with the same content, it can dilute the site’s overall SEO strength and confuse search engines about which page to rank for a particular query. This can lead to keyword cannibalization and decreased overall search visibility for the site.

Moreover, internal search result pages are not typically optimized for search engines in the same way that regular content pages are. They may lack meta tags, optimized headings, or proper internal linking structures, making them less valuable from an SEO perspective. Allowing Google to index these pages could potentially harm the site’s overall search performance if they are not appropriately optimized.

In addition, having internal search result pages indexed by Google can also result in a poor user experience. Users who land on these pages from search results may not find them useful or become frustrated when they cannot find the necessary information. This can lead to a high bounce rate and lower user engagement metrics, which can, in turn, negatively impact the site’s search rankings.

The decision to allow Google to index internal search result pages should be made carefully, weighing the potential benefits of increased visibility against the risks of keyword cannibalization, duplicate content issues, and poor user experience. Site owners should consider optimizing these pages for search engines and implementing proper indexing controls to ensure that only valuable, relevant content appears in search results.

Best practices for optimizing internal search result pages for search engines

Optimizing Internal Search Result Pages for Search Engines

When optimizing internal search result pages for search engines, several key best practices can help improve their visibility and ranking in search results. By following these guidelines, website owners can ensure that their internal search pages are effectively crawled and indexed by search engine bots, leading to increased organic traffic and better user experience.

Implement Clear and Descriptive URLs

One of the fundamental aspects of optimizing internal search result pages is to use clear and descriptive URLs. Including relevant keywords in the URLs can help search engines understand the page’s content and index it appropriately. Avoid using dynamic parameters in URLs and opt for static, keyword-rich URLs.
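As a rough illustration of moving from a parameter-based URL to a static, keyword-rich one, the sketch below converts a search URL into a slug-style path. The `q` parameter and the `/find/` prefix are hypothetical choices made for this example, not a convention taken from any specific platform.

```python
import re
from urllib.parse import urlsplit, parse_qs


def static_search_path(url, query_param="q", prefix="/find/"):
    """Build a static, readable path from a parameter-based search URL.

    Both query_param and prefix are illustrative assumptions.
    Returns None when the URL carries no usable search term.
    """
    query = parse_qs(urlsplit(url).query)
    term = query.get(query_param, [""])[0]
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", term.lower()).strip("-")
    return f"{prefix}{slug}/" if slug else None


print(static_search_path("https://example.com/search?q=Red+Shoes&sort=price"))
```

In practice the server would also need a rewrite or routing rule that maps the static path back to the search, which is outside the scope of this sketch.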

Create Unique and Compelling Meta Tags

Meta titles and descriptions are crucial for search engine optimization. Ensure that each internal search result page has a unique and compelling meta title and description that accurately describes the page’s content. Including relevant keywords in meta tags can improve the click-through rate (CTR) on search engine results pages.

Optimize On-Page Content

Optimizing the on-page content of internal search result pages is essential for better search engine visibility. Ensure that the content on these pages is relevant, informative, and engaging. Use headings, subheadings, and bullet points to make the content more scannable for users and search engines. Add relevant keywords naturally throughout the content to improve keyword relevancy.

Improve Internal Linking Structure

Internal linking is a critical aspect of SEO. Ensure that internal search result pages are linked from relevant website sections to enhance visibility. Use descriptive anchor text with relevant keywords to provide context for users and search engine crawlers.

Monitor Performance and Make Continuous Improvements

Regularly monitor the performance of internal search result pages in search engine results pages (SERPs) using tools like Google Search Console. Analyze key metrics such as click-through rates, impressions, and average positions to identify areas for improvement. Make continuous optimizations based on data insights to enhance the visibility and ranking of internal search pages.
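Click-through rate is simply clicks divided by impressions. The short sketch below works through that arithmetic on invented numbers; the row layout is an assumption made for illustration and is not the export format of any particular tool.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction; 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0


# Hypothetical per-page figures for two internal search result pages.
rows = [
    {"page": "/search?q=red+shoes", "clicks": 12, "impressions": 400},
    {"page": "/search?q=blue+hats", "clicks": 3, "impressions": 600},
]

for row in rows:
    print(row["page"], f"{ctr(row['clicks'], row['impressions']):.1%}")

# The overall CTR is computed from the totals, not by averaging per-page rates.
total_clicks = sum(r["clicks"] for r in rows)
total_impressions = sum(r["impressions"] for r in rows)
overall_ctr = ctr(total_clicks, total_impressions)
print("overall", f"{overall_ctr:.1%}")
```

Pages with many impressions but a very low CTR are natural candidates for either better titles and descriptions or removal from the index.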

By following these best practices for optimizing internal search result pages for search engines, website owners can improve their SEO performance, increase organic traffic, and provide a better user experience for visitors navigating internal search results.

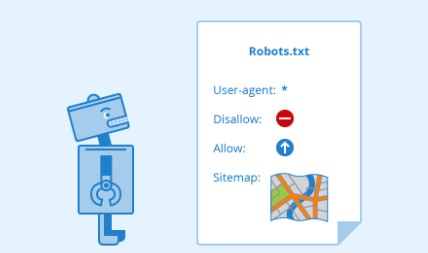

How to control Google’s access to internal search result pages using robots.txt

Controlling Google’s Access to Internal Search Result Pages

When managing how Google crawls your website’s internal search result pages, the robots.txt file can be a powerful tool. By telling search engine crawlers which pages to crawl and which ones to ignore, you gain more control over your site’s search engine optimization (SEO) performance. Here’s how you can effectively control Google’s access to internal search result pages using the robots.txt file.

Understanding the robots.txt File

The robots.txt file is a plain-text file that tells web robots and search engine crawlers which areas of a website they should not crawl. It acts as a gatekeeper, controlling crawler access to specific pages and directories on your site. By configuring the robots.txt file correctly, you can keep search engines like Google from crawling certain parts of your website, including internal search result pages. Keep in mind that robots.txt governs crawling rather than indexing: a blocked URL can still appear in the index if other pages link to it.

Blocking Internal Search Result Pages

To block Google from crawling internal search result pages, you can use the robots.txt file to disallow access to these pages. By adding a directive such as “Disallow: /search/” to the robots.txt file, you stop compliant search engine crawlers from fetching any URL whose path begins with /search/.

Example:

    User-agent: *
    Disallow: /wp-admin/
    Disallow: /wp-admin/admin-ajax.php
    Disallow: /search/

    Sitemap: https://seonorms.com/sitemap.xml
    Sitemap: https://seonorms.com/sitemap.rss

Implementing Noindex Meta Tags

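In addition to using the robots.txt file, another best practice is to include a robots meta tag on internal search result pages that instructs search engines not to index them. By adding the “noindex” meta tag to the HTML of these pages, you signal to search engine crawlers that they should not include these pages in their index. Note that a crawler can only read this tag if it is allowed to fetch the page, so a URL that is disallowed in robots.txt will never have its noindex tag seen. Choose one mechanism for each URL pattern rather than stacking both.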
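The tag itself is a single line in the page’s head: `<meta name="robots" content="noindex">`. As a minimal sketch of how you might confirm that a search results template actually emits it, the Python below parses an HTML string with the standard library and reports whether a noindex directive is present. The sample markup is invented for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        name = (attr.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def has_noindex(html):
    """Return True if the HTML carries a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical search results page markup.
page = (
    '<html><head><meta name="robots" content="noindex, follow"></head>'
    "<body>Results</body></html>"
)
print(has_noindex(page))
```

This only inspects the HTML; it does not cover a noindex sent through an HTTP response header.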

Regularly Monitoring and Updating

It’s essential to regularly monitor and update your robots.txt file to ensure that it continues to control Google’s access to internal search result pages effectively. Reviewing and adjusting your robots.txt directives is crucial to maintaining optimal SEO performance as your website evolves and new pages are added.
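One way to make this review repeatable is a small check that feeds your robots.txt rules to a parser and confirms that search URLs are still blocked while regular content stays crawlable. The sketch below uses Python’s standard `urllib.robotparser`; the rules and `example.com` URLs are illustrative assumptions. Note that this parser does simple prefix matching and does not evaluate Google-style `*` and `$` wildcards, so treat it as a sanity check rather than an exact model of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules; substitute the contents of your own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


# Hypothetical URLs: one internal search page, one regular content page.
urls = [
    "https://example.com/search/red-shoes",
    "https://example.com/blog/red-shoes-guide",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

Running a check like this after each robots.txt change helps catch a rule that accidentally blocks regular content or stops covering the search path.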

By leveraging the robots.txt file and implementing other best practices like noindex meta tags, you can exert greater control over how Google crawls and indexes your website’s internal search result pages. This level of control can help improve your site’s SEO performance and ensure that only the most relevant and valuable content is visible to search engine users.

Factors to Consider when Deciding on Google’s Crawling of Internal Search Result Pages

Several crucial factors need to be considered when contemplating whether to allow Google to crawl internal search result pages on your website. These factors can significantly impact your site’s SEO performance and overall user experience.

Impact on User Experience

One of the primary considerations when determining whether to block Google from crawling internal search result pages is the impact on user experience. Indexed internal search pages may lead users to irrelevant search results within your website.

Effect on Site Speed

Allowing Google to crawl internal search result pages means Googlebot may request a large number of search URL variations, which adds load on your server and can potentially slow down load times. This could harm user experience, as visitors may be less inclined to remain on your site if pages take too long to load.

Duplicate Content Issues

Indexing internal search result pages can also lead to problems with duplicate content. When Google indexes multiple search result pages with similar content, it may not know which page to prioritize in search results, potentially diluting the SEO value of your primary pages.

SEO Performance and Index Bloat

Having internal search result pages indexed can result in index bloat, where Google indexes many low-quality pages. This can negatively impact your site’s overall SEO performance, as search engines may view the abundance of indexed search result pages as less valuable content.
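To get a rough sense of how much of your indexed footprint is search pages, you can measure their share within a list of indexed URLs. The sketch below assumes you already have such a list (for example, from an export) and that search pages live under a `/search` path; both are assumptions for illustration.

```python
from urllib.parse import urlsplit


def search_share(urls, search_prefix="/search"):
    """Fraction of URLs whose path starts with search_prefix (0.0 if empty)."""
    if not urls:
        return 0.0
    hits = sum(1 for u in urls if urlsplit(u).path.startswith(search_prefix))
    return hits / len(urls)


# Hypothetical list of indexed URLs.
indexed = [
    "https://example.com/",
    "https://example.com/blog/red-shoes-guide",
    "https://example.com/search?q=red+shoes",
    "https://example.com/search?q=blue+hats",
]
print(f"{search_share(indexed):.0%} of indexed URLs are search pages")
```

A high share suggests that search result pages make up a large part of what Google has indexed for the site.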

Robots.txt Configuration

To prevent Google from crawling internal search result pages, you can use the robots.txt file on your website. By blocking the URL patterns that lead to search results, you can control which pages Google crawls and improve the overall quality of the content that appears in search engine results.

The decision to block Google from crawling internal search result pages should be based on carefully considering these factors. By prioritizing user experience, site speed, and SEO performance, you can make an informed decision that aligns with your website’s goals and objectives. By implementing best practices for optimizing internal search result pages and controlling Google’s access through robots.txt, you can effectively manage how search engines interact with your site’s search functionality.

Key Takeaway:

When considering whether to block Google from crawling internal search result pages, carefully weigh the pros and cons. While blocking these pages can prevent duplicate content issues and preserve the crawl budget for more valuable pages, it may also hinder the indexation of potentially relevant content. The impact on SEO performance is significant, as indexed internal search result pages can dilute overall rankings and visibility. To optimize internal search result pages for search engines, ensure unique and valuable content, implement structured data markup, and prioritize user experience. Controlling Google’s access to these pages through robots.txt provides a flexible solution, allowing for selective crawling. Other factors to consider include the intent behind the content, user behavior on internal search result pages, and the overall impact on site visibility and authority. Ultimately, a strategic approach that aligns with SEO goals and user needs is crucial in determining whether to block Google from crawling internal search result pages.

Conclusion

In weighing the pros and cons of blocking Google from crawling internal search result pages, it is evident that there are valid arguments on both sides. By allowing Google to index these pages, websites can gain more visibility and traffic, positively impacting SEO performance. However, there is also the risk of diluting the overall quality of the website’s search results and potentially causing duplicate content issues.

The decision to index internal search result pages can significantly impact SEO performance. While indexed search result pages may increase the number of indexed pages on a website and improve organic search visibility, they may also lead to duplicate content issues, keyword cannibalization, and a dilution of link equity across multiple pages. This can impact the overall search engine rankings and visibility of the website.

To optimize internal search result pages for search engines, it is essential to follow best practices such as ensuring that search result pages have unique and valuable content, implementing pagination to enhance user experience and crawlability, optimizing meta tags and headings for relevant keywords, and using schema markup to provide search engines with context about the content on the page. By implementing these practices, websites can improve the visibility and relevance of their internal search result pages in search engine results.

Controlling Google’s access to internal search result pages can be achieved using the robots.txt file. By disallowing Googlebot from crawling specific search result pages, web admins can stop Google from fetching these pages, which in most cases keeps them out of Google’s search results. However, it is essential to exercise caution when using robots.txt directives to block search result pages, as an overly broad rule can harm the crawlability and indexation of the rest of the website.

When deciding whether to block Google from crawling internal search result pages, web admins should consider other factors such as the quality and relevance of the internal search result pages, the potential impact on user experience (UX) and website performance, the overall SEO strategy and objectives of the website, and the resources available for optimizing search result pages. It is essential to weigh the potential benefits and drawbacks of blocking Google from crawling internal search result pages to make an informed decision that aligns with the website’s goals and objectives.

Understanding the implications of blocking Google from crawling internal search result pages and implementing best practices for optimizing them can help websites improve their SEO performance and overall visibility in search engine results. By carefully evaluating the pros and cons, implementing best practices, and considering other relevant factors, webmasters can make informed decisions to effectively manage Google’s access to internal search result pages and maximize the impact on their website’s SEO performance.